Feedforward neural network (Neuronska mreža širenja unapred)

Revision as of 23:31, 22 March 2024

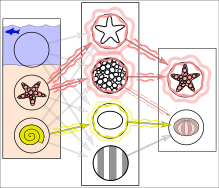

Simplified example of training a neural network in object detection: The network is trained on multiple images that are known to depict starfish and sea urchins, which are correlated with "nodes" that represent visual features. The starfish match with a ringed texture and a star outline, whereas most sea urchins match with a striped texture and an oval shape. However, one instance of a ring-textured sea urchin creates a weakly weighted association between the ringed texture and the sea urchin.

Subsequent run of the network on an input image (left):[1] The network correctly detects the starfish. However, the weakly weighted association between ringed texture and sea urchin also confers a weak signal to the latter from one of two intermediate nodes. In addition, a shell that was not included in the training gives a weak signal for the oval shape, also resulting in a weak signal for the sea urchin output. These weak signals may result in a false positive result for sea urchin.

In reality, textures and outlines would not be represented by single nodes, but rather by associated weight patterns of multiple nodes.

A feedforward neural network (FNN; Serbian: Neuronska mreža širenja unapred) is one of the two broad types of artificial neural network, characterized by the direction in which information flows between its layers.[2] The flow is uni-directional: information moves only forward, from the input nodes, through the hidden nodes (if any), to the output nodes, without any cycles or loops.[2] This contrasts with recurrent neural networks,[3] which have a bi-directional flow. Modern feedforward networks are trained using the backpropagation method[4][5][6][7][8] and are colloquially referred to as "vanilla" neural networks.[9]
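The one-directional flow described above can be illustrated with a minimal forward pass. This is only a sketch in plain Python: the 2-3-1 layer sizes, the sigmoid activation, the weight values, and the helper names `dense` and `forward` are assumptions chosen for illustration and do not come from the article's sources.

```python
import math

def sigmoid(x):
    """Logistic activation, used here purely as an illustrative choice."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(weights * inputs + biases)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the input through each layer exactly once, in order.

    Nothing computed in a later layer is ever fed back to an earlier one,
    which is what makes the network feedforward rather than recurrent.
    """
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations

# Hypothetical 2-3-1 network with arbitrary illustrative weights.
layers = [
    ([[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1], sigmoid),
    ([[1.0, -1.0, 0.5]], [0.2], sigmoid),
]
output = forward([1.0, 0.0], layers)
print(output)
```

Each layer consumes only the activations of the layer before it, so the computation is a single sweep from input to output.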

Timeline

- In 1958, Frank Rosenblatt introduced a layered network of perceptrons in his book Perceptron. It consisted of an input layer, a hidden layer with randomized weights that did not learn, and an output layer with learning connections.[10][11][12] This extreme learning machine[13][12] was not yet a deep learning network.

- In 1965, Alexey Grigorevich Ivakhnenko and Valentin Lapa published the first deep-learning feedforward network, which did not yet use stochastic gradient descent. It was known at the time as the Group Method of Data Handling.[14][15][12]

- In 1967, a deep-learning network that used stochastic gradient descent for the first time was able to classify non-linearly separable pattern classes, as reported by Shun'ichi Amari.[16] Amari's student Saito conducted the computer experiments, using a five-layered feedforward network with two learning layers.

- In 1970, the modern backpropagation method, an efficient application of chain-rule-based supervised learning,[17][18] was published for the first time by the Finnish researcher Seppo Linnainmaa.[4][19][12] The term itself ("back-propagating errors") had already been used by Rosenblatt,[11] but he did not know how to implement it,[12] although a continuous precursor of backpropagation had been used in the context of control theory in 1960 by Henry J. Kelley.[5][12] The method is also known as the reverse mode of automatic differentiation.

- In 1982, Paul Werbos was the first to apply backpropagation in the way that has since become standard.[7][12]

- In 1985, an experimental analysis of the technique was conducted by David E. Rumelhart et al.[8] Many improvements to the approach have been made in subsequent decades.[12]

- In 1987, Matthew Brand used stochastic gradient descent to train a wide, 12-layer nonlinear feed-forward network to reproduce logic functions of nontrivial circuit depth, using small batches of random input/output samples. He concluded, however, that this was impractical on the hardware available at the time (sub-megaflop computers), and proposed using fixed random early layers as an input hash for a single modifiable layer.[20]

- In the 1990s, Vladimir Vapnik and his colleagues developed the support vector machine approach, a much simpler alternative to neural networks that is nonetheless related to them.[21] In addition to performing linear classification, support vector machines can efficiently perform non-linear classification using what is called the kernel trick, which implicitly works in high-dimensional feature spaces.

- In 2003, interest in backpropagation networks returned following the successful application of deep learning to language modelling by Yoshua Bengio and co-authors.[22]

- In 2017, modern transformer architectures were introduced.[23]
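The two techniques that recur throughout this timeline, backpropagation (gradients obtained by applying the chain rule backward from the output) and a stochastic gradient descent update on a single sample, can be sketched as follows. This is a minimal illustration and not a reconstruction of any of the historical implementations: the 2-2-1 architecture, tanh hidden units, linear output, squared-error loss, learning rate, and all names (`forward`, `loss`, `backprop`, `sgd_step`) are assumptions made for the example.

```python
import math

def forward(params, x):
    """Return hidden activations and the scalar output of a 2-2-1 network."""
    W1, b1, W2, b2 = params
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    y = sum(w * hi for w, hi in zip(W2, h)) + b2
    return h, y

def loss(params, x, target):
    """Squared error for one training sample."""
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2

def backprop(params, x, target):
    """Gradient of the loss with respect to every parameter, via the chain rule."""
    W1, b1, W2, b2 = params
    h, y = forward(params, x)
    dy = y - target                      # dL/dy
    gW2 = [dy * hi for hi in h]          # dL/dW2
    gb2 = dy                             # dL/db2
    # Propagate the error back through tanh: d tanh(z)/dz = 1 - tanh(z)^2.
    dz = [dy * w * (1.0 - hi * hi) for w, hi in zip(W2, h)]
    gW1 = [[d * xi for xi in x] for d in dz]
    gb1 = dz
    return gW1, gb1, gW2, gb2

def sgd_step(params, grads, lr):
    """One gradient descent update computed from a single sample."""
    W1, b1, W2, b2 = params
    gW1, gb1, gW2, gb2 = grads
    return (
        [[w - lr * g for w, g in zip(wr, gr)] for wr, gr in zip(W1, gW1)],
        [b - lr * g for b, g in zip(b1, gb1)],
        [w - lr * g for w, g in zip(W2, gW2)],
        b2 - lr * gb2,
    )

# Arbitrary illustrative parameters and one (input, target) sample.
params = ([[0.1, -0.2], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.3], 0.05)
x, target = [1.0, 0.5], 1.0
grads = backprop(params, x, target)
new_params = sgd_step(params, grads, lr=0.1)
print(loss(params, x, target), loss(new_params, x, target))
```

The backward pass reuses the activations stored during the forward pass, which is why the full gradient costs roughly as much as one extra sweep through the network.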

References

- Ferrie, C.; Kaiser, S. (2019). Neural Networks for Babies. Sourcebooks. ISBN 1492671207.

- Zell, Andreas (1994). Simulation Neuronaler Netze [Simulation of Neural Networks] (in German) (1st ed.). Addison-Wesley. p. 73. ISBN 3-89319-554-8.

- Schmidhuber, Jürgen (2015-01-01). "Deep learning in neural networks: An overview". Neural Networks. 61: 85–117. ISSN 0893-6080. PMID 25462637. S2CID 11715509. arXiv:1404.7828. doi:10.1016/j.neunet.2014.09.003.

- Linnainmaa, Seppo (1970). The representation of the cumulative rounding error of an algorithm as a Taylor expansion of the local rounding errors (Masters) (in Finnish). University of Helsinki. pp. 6–7.

- Kelley, Henry J. (1960). "Gradient theory of optimal flight paths". ARS Journal. 30 (10): 947–954. doi:10.2514/8.5282.

- Rosenblatt, Frank (1961). Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms. Spartan Books, Washington DC.

- Werbos, Paul (1982). "Applications of advances in nonlinear sensitivity analysis" (PDF). System modeling and optimization. Springer. pp. 762–770. Archived (PDF) from the original on 14 April 2016. Retrieved 2 July 2017.

- Rumelhart, David E.; Hinton, Geoffrey E.; Williams, R. J. (1986). "Learning Internal Representations by Error Propagation". In Rumelhart, David E.; McClelland, James L.; and the PDP research group (eds.), Parallel distributed processing: Explorations in the microstructure of cognition, Volume 1: Foundation. MIT Press.

- Hastie, Trevor; Tibshirani, Robert; Friedman, Jerome (2009). The Elements of Statistical Learning: Data Mining, Inference, and Prediction. Springer, New York, NY.

- Rosenblatt, Frank (1958). "The Perceptron: A Probabilistic Model For Information Storage And Organization in the Brain". Psychological Review. 65 (6): 386–408. CiteSeerX 10.1.1.588.3775. PMID 13602029. S2CID 12781225. doi:10.1037/h0042519.

- Rosenblatt, Frank (1962). Principles of Neurodynamics. Spartan, New York.

- Schmidhuber, Juergen (2022). "Annotated History of Modern AI and Deep Learning". arXiv:2212.11279 [cs.NE].

- Huang, Guang-Bin; Zhu, Qin-Yu; Siew, Chee-Kheong (2006). "Extreme learning machine: theory and applications". Neurocomputing. 70 (1): 489–501. CiteSeerX 10.1.1.217.3692. S2CID 116858. doi:10.1016/j.neucom.2005.12.126.

- Ivakhnenko, A. G. (1973). Cybernetic Predicting Devices. CCM Information Corporation.

- Ivakhnenko, A. G.; Lapa, Valentin Grigorʹevich (1967). Cybernetics and forecasting techniques. American Elsevier Pub. Co.

- Amari, Shun'ichi (1967). "A theory of adaptive pattern classifier". IEEE Transactions. EC (16): 279–307.

- Rodríguez, Omar Hernández; López Fernández, Jorge M. (2010). "A Semiotic Reflection on the Didactics of the Chain Rule". The Mathematics Enthusiast. 7 (2): 321–332. S2CID 29739148. doi:10.54870/1551-3440.1191. Retrieved 2019-08-04.

- Leibniz, Gottfried Wilhelm Freiherr von (1920). The Early Mathematical Manuscripts of Leibniz: Translated from the Latin Texts Published by Carl Immanuel Gerhardt with Critical and Historical Notes (Leibniz published the chain rule in a 1676 memoir). Open court publishing Company. ISBN 9780598818461.

- Linnainmaa, Seppo (1976). "Taylor expansion of the accumulated rounding error". BIT Numerical Mathematics. 16 (2): 146–160. S2CID 122357351. doi:10.1007/bf01931367.

- Brand, Matthew (1988). Machine and Brain Learning. University of Chicago Tutorial Studies Bachelor's Thesis, 1988. Reported at the Summer Linguistics Institute, Stanford University, 1987.

- Collobert, R.; Bengio, S. (2004). Links between Perceptrons, MLPs and SVMs. Proc. Int'l Conf. on Machine Learning (ICML).

- Bengio, Yoshua; Ducharme, Réjean; Vincent, Pascal; Janvin, Christian (March 2003). "A neural probabilistic language model". The Journal of Machine Learning Research. 3: 1137–1155.

- Geva, Mor; Schuster, Roei; Berant, Jonathan; Levy, Omer (2021). "Transformer Feed-Forward Layers Are Key-Value Memories". Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing. pp. 5484–5495. S2CID 229923720. doi:10.18653/v1/2021.emnlp-main.446.